Our company name celebrates the connections that we all share. The elephants in our logo represent the unity and dignity that we try to embody.

We are not trying to be the fastest or the cheapest. We strive to do consistently excellent work and to help with whatever transition you are going through. We use respect, determination, good humor, and technique to get you to the other side. Thank you for giving us the opportunity to do work that we are proud of.

About Our Team

Cal T License #189403

Committed to excellence with over 100 happy clients on Yelp and Google

We offer professional packing, moving, assembly, unloading, disposal, and more

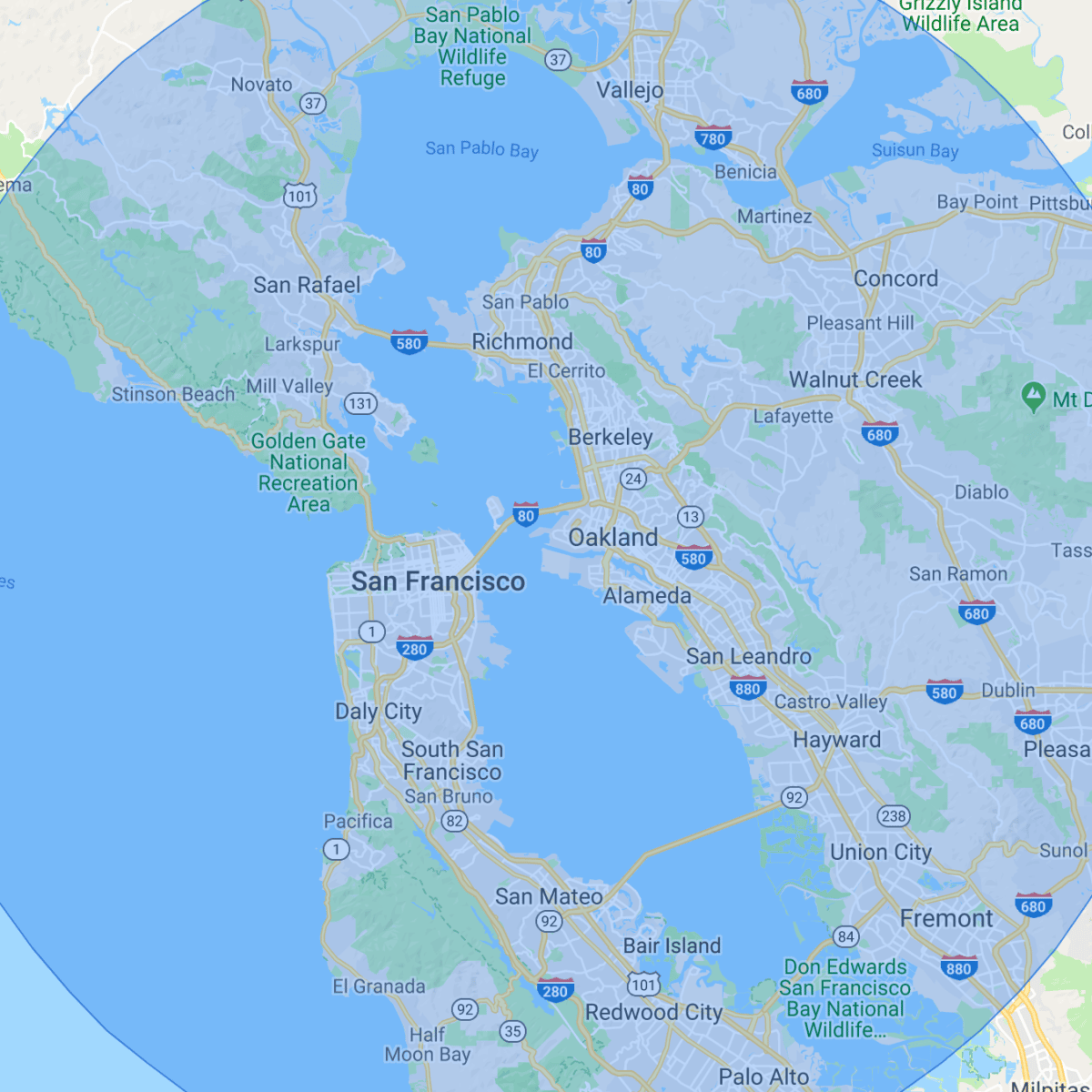

We work to make our company, Oakland, and the Bay Area stronger.

For more information, or for the fastest response, please call us at (510) 839-5239.

3955 Whittle Avenue, Oakland, CA 94602

© 2022 Big Family Movers